Under the Hood Series: Hunters’ Open XDR Approach to Flexible Data Ingestion

by Ido Trumer, Data Engineer and Dvir Sayag, Cyber Research Content Lead

Aug 19, 2021Under the Hood Series: Hunters' Open XDR Approach to Flexible Data Ingestion

Welcome to Hunters’ second blog post of the “Open XDR - Under the Hood” series, which focuses on Flexible Ingestion: its importance, how it works in Hunters XDR, and how the heavy-lifting of data engineering is tackled in order to be able to ingest data at scale and make it usable. Flexible Ingestion is a mandatory, data-driven requirement, and a pillar that strongly differentiates Open XDRs from single-stack ones. Open XDRs connect to security telemetry from a variety of security tools and vendors, both on-premises and in the cloud.

With Hunters XDR, security teams have the ability to seamlessly ingest different types of security vendors via the product’s portal, removing the burden of data engineering. In order to do so, customers can either use their own data lake or use Hunters’ data lake based on Snowflake to host the ingested data.

Data Ingestion in the Hunters' Portal

Hunters XDR truly employs a complete approach to data, connecting to all security telemetry sources, and maintaining accessibility to the data for investigation. It all begins with the raw data: ingesting multiple data sources from an API to integrate directly to security tool feeds, S3 buckets, SIEM solutions or a data lake.

Hunters XDR supports a wide variety of security products, spanning endpoint, network, identity, email, cloud and more, as well as threat intelligence sources to further contextualize and enrich customers’ security data.

The data is ingested, at any scale necessary - terabyte, petabyte-scale - into a data warehouse in a structured way. If customers own a data lake then Hunters will directly ingest the security data into it, and if they don’t then the data will be directed to Hunters’ own data lake, powered by Snowflake. The raw data is accessible for the customer in an easy to query manner too.

The data is then normalized into a data schema that allows the platform to interpret different telemetry, which facilitates stronger detection.

You can see the full list of integrations here

Behind the Scenes of Ingestion: How does the Magic Work?

While in the Hunters’ portal connecting to different data sources and starting the ingestion process is smooth and simple, behind that lies a complex data engineering process that truly allows to extend the usability of data across the attack surface.

Each security vendor that Hunters XDR integrates with has its own specifications and requirements for ingesting data, so having dedicated code infrastructure to support the data ingestion from each vendor seems inevitable. Given that the security stack of each of Hunters’ customers is wide, and varied, the heavy-lifting involved with this process would simply not allow for scale: nor our customers’ in terms of data usability, not ours as a company.

In order to deal with this growing challenge, Hunters’ ingestion engineering team developed what we internally call “Generic Collectors” in order to deal with data ingestion at scale.

Let’s take a closer look at this concept.

"Generic Collectors"

“Generic Collectors” are built in a way that they can support as many security tools as possible, standardizing the ingestion process across multiple tools.

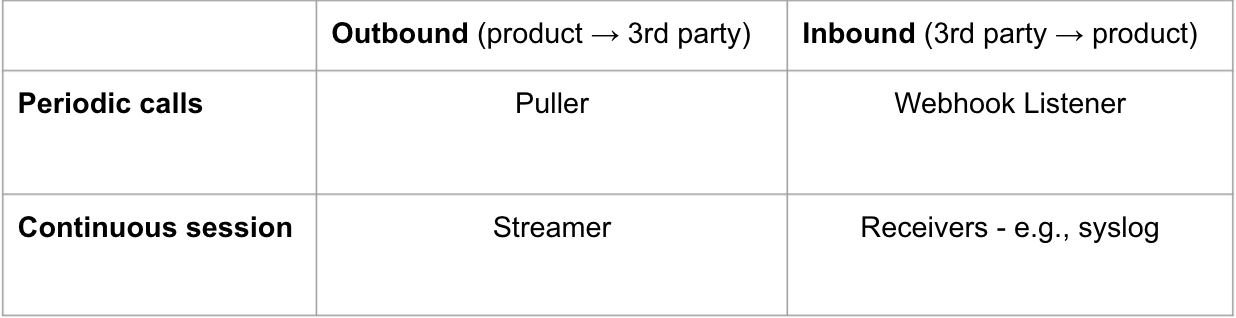

The first step for understanding the methodology is breaking down the different generic groups that security products can be classified into:

Outbound refers to when we need to initiate the connection with the vendor to pull the users' data. This may happen periodically and it may happen continuously (e.g., when subscribing to a vendor's feed is needed).

Another case is when the vendor initiates the connection to us, shipping events or logs. It may happen periodically and then the solution is some sort of a listener that always pays attention to whether there is data being shipped. This may also happen continuously and then there are receivers such as syslog that always work on receiving data.

Let’s now see what happens when the security vendors require an outbound connection with periodic calls, which is the most common case. For those, we use what we call “Pullers”.

"Puller Collectors"

There are many known use cases of security tools that require outbound connections with periodic calls: Sophos, Cyren, Meraki, Zoom, and many others.

Illustration of Puller execution flow, triggered by a scheduler

Let’s explain the concept of “Pullers” by using the example of Sophos Central ingestion.

There is a clock (scheduler) that periodically initiates the Generic Puller instance. The timing depends on the vendor that the data is pulled from. Specifically for Sophos it runs every 15 minutes. After the initiation, the instance connects to the vendor’s interface. Sophos' interface is a central API that exposes two different data types: alerts and events.

When the instance receives the data from the vendor, it writes it to Hunters’ storage. The main thing then that is left to do is to normalize the data and insert it to the Hunters’ detection pipeline, running advanced analytics and providing insights to the customer.

There are many benefits of using this kind of ingestion method: Time-savings, savings in compute power, removing the data engineering heavy lifting, and of course the ability to add new data sources that increase the threat detection coverage.

"Puller Collectors" Abstraction

It probably sounds obvious that a “collector” should just collect data and move it to the next step of the pipeline, but this simple task of collecting is more complicated than that.

A collector should do two things:

- Pulling - There are a lot of questions while designing the pulling action. Do we want to pull a snapshot of data? Maybe we want to query an index of events? Or perhaps we want to query by start and end time?

- Loading - This is the act of persisting received data to a designated place. For example, at Hunters most of the collectors will persist the data into a S3 bucket, and after that it will be loaded into Snowflake.

Loading is straightforward: simply write data to a specific place.

The pulling layer is the one to talk about. A general pulling layer will perform the following steps:

- Read current state (for example: latest query time).

- Connect to the vendors’ end

- Call the endpoint and get the results

- Update the state (saving the new latest query time, which is the present)

- Persist the results (write them to a storage)

So for example, if we want to collect by time, we would read the last queried time, generate the new end time (which would be now), send these arguments to the endpoint, and it will return the results and the strategy will update its state. This logic is true whether the puller is triggered every 30 seconds or once a day.

Conclusion

Flexible Ingestion is key to support a fully open, extended detection and response program as it gives the ability to connect the entire security stack of an organization and subsequently normalize the data to make it usable and start deriving insights from it.

With Hunters XDR, customers can:

- Break data silos and ingest all available data

- Lift and shift security telemetry to a modern data cloud

- Apply structure to raw data for effective correlation, search and investigation

- Apply cost-effective, always-available hot storage of data for rapid incident investigation

Read our previous blog post of the Open XDR - Under the Hood Series: Threat Investigation & Scoring, and stay tuned for next one which will cover Hunters’ Detection Engine.

Also, our Ingestion team is hiring! If you want to work on solving complex and exciting challenges in Ingestion, you can apply here.